Mental Health Tech: Does it still suck?

A deep dive into the current mental health startup ecosystem, from a seeker's and a therapist's perspective

A Bittersweet Pandemic

Each day of 2020 and 2021 was more or less the same: we were trapped inside our homes, with screens as our only real exposure to the outside world. With no chance to socialize, I was left with ample time for introspection and reflection.

I wanted to commit myself to therapy but had to wait a couple of months (well, it was more than that :/ ) to save up enough through internship(s) for a private therapist. Even then I didn’t go, because therapy is still very much a taboo in Indian society. I hope penning down my experience helps reduce the stigma attached to it.

The last straw came in early 2022, when I started flinching and consulted a therapist on a friend’s recommendation. It is a tough ride in the beginning, but trust me, it gets better eventually.

"Hope is being able to see that there is light despite all of the darkness." - Desmond Tutu

The World Health Organization (WHO) estimates that more than 700,000 people globally died by suicide in 2019, making it a more common cause of death than malaria, HIV/AIDS, or breast cancer. Suicide already was the second leading cause of death for children. We had already seen pretty high levels of substance use, depression, and violent behavior. But the pandemic has been a perfect storm of a stressor, which we know leads to a really notable increase in psychological symptoms among kids.

We’re seeing a real reduction in emotional intimacy among kids because so much of their communication is now electronic. So there’s not an opportunity for disclosure and support, or for feeling like you’re able to really represent yourself in an authentic way.

Still, in a world where 1 in 7 people between 10 and 19 years old experiences a mental disorder, according to the World Health Organization, not all are able to access the help they need. Issues left unaddressed at an early age turn into heavy baggage and only worsen with time.

A mental health epidemic was already underway ( decreasing social affinity, disrupted family structures, purposeless jobs, sedentary lifestyles etc. ), but the pandemic helped expose the worst side of it.

Let’s dive into the broad categories of current tech-enabled solutions:

( I will loosely use the term Therapist to include Counselling Psychologist / Clinical Psychologist / Rehabilitation Therapist / Psychiatrist / Psychotherapist )

Single Player Apps

Headspace ( Series C, Total Funding: $215.9M, Valuation: $3B ) / Calm ( Series C, Total Funding: $218M, Valuation: $2B ):

Teaches users the art of meditation and how to deal with stress

Provides mindfulness and breathing techniques training through guided meditation and chats with calming music in the background to help users take care of their mental health and improve their quality of sleep

Kona ( Total Funding: $4M Seed ):

Burnout prevention platform for remote teams, built for Slack, offering constant monitoring, wellness check-ins, built-in alerts for managers, and an AI chatbot

Subscription-based access model for unlocking the entire suite of tools

Some valid criticism of the new wave of such apps: here

Aggregators

BetterHelp ( acquired by Teladoc ) / Talkspace ( $300M Post IPO Equity ) / Brightline ( Series C, Total Funding: $212M, Valuation: $705M ):

Uberisation of Online Therapy

They follow cognitive behavioral therapy ( a form of talk therapy ), which is an evidence-based treatment

Focus on B2B / B2B2C rather than B2C

Alma ( Series D, Total Funding: $220M, Valuation: $800M ):

Provides affordable mental health care

Most therapists at Alma accept insurance. Users can simply select their insurance provider to see available therapists who offer in-network care

B2B / B2B2C models drive revenue due to a multitude of factors, including but not limited to:

Selling to businesses isn’t very difficult since most firms have budget allocations for Employee Assistance Programmes already in place

Subscription Model:

A steady stream of revenue, since these platforms charge employers/schools a monthly subscription fee, based on their total headcount, for access to the platform’s therapists

Higher Customer LTV:

A good proportion of employees don’t need the services provided by their EAP but are factored into the pricing regardless

There is an upper limit on the number of therapy sessions an employee can have on the platform each month

Higher Retention:

Principal-agent problem: an employee has no control over which mental health platform their employer chooses for the EAP, hence better user retention for such platforms
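To make the headcount-based pricing logic above concrete, here is a rough back-of-the-envelope sketch. Every number and name in it (`EapContract`, the fee, the utilization rate, the session cap) is a made-up assumption for illustration, not data from any of the platforms mentioned.

```python
from dataclasses import dataclass


@dataclass
class EapContract:
    """Hypothetical employer contract priced on total headcount."""
    headcount: int             # all employees covered, whether they use therapy or not
    fee_per_employee: float    # monthly fee per covered employee (assumed value)
    utilization_rate: float    # fraction of employees who actually book sessions
    sessions_wanted: float     # sessions an active user would like per month
    session_cap: int           # upper limit on sessions per employee per month

    def monthly_revenue(self) -> float:
        # The platform is paid for everyone, including non-users.
        return self.headcount * self.fee_per_employee

    def sessions_delivered(self) -> float:
        # Active users cannot exceed the monthly cap.
        per_user = min(self.sessions_wanted, self.session_cap)
        return self.headcount * self.utilization_rate * per_user

    def revenue_per_session(self) -> float:
        sessions = self.sessions_delivered()
        return self.monthly_revenue() / sessions if sessions else float("inf")


# Illustrative numbers only.
contract = EapContract(
    headcount=1000,
    fee_per_employee=5.0,
    utilization_rate=0.05,
    sessions_wanted=3,
    session_cap=2,
)
print(f"Monthly revenue: {contract.monthly_revenue():.2f}")
print(f"Sessions delivered: {contract.sessions_delivered():.0f}")
print(f"Revenue per delivered session: {contract.revenue_per_session():.2f}")
```

Under these assumed inputs, low utilization and the session cap both push revenue per delivered session up, which is the intuition behind the "Higher Customer LTV" point.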

Unique Problems with B2C:

Therapy is an expensive commodity as you are paying for someone’s time. Some therapists do offer sliding-scale fees to help students afford therapy

Getting the costs covered under insurance is a significant hassle, with guidelines from the GOI released only very recently:

All health insurance policies must, starting on October 31, 2022, include coverage for mental illness. "All insurance plans must cover mental illness and adhere strictly to the requirements of the MHC Act, 2017"

High Take Rates:

Therapists who get their clients through these platforms take a sizeable pay cut compared with clients who come through their private practice, and they often face burnout because their schedules leave too few gaps for their own therapy. The situation is even worse for school counsellors ( in India ), who are burdened with admin work

Establishing one’s private practice is a humongous task due to:

High upfront costs, for instance office space

Self-promotion of mental health therapy services can seem unethical. Most practices rely on a network-based clientele, so getting clients beyond word-of-mouth needs a huge investment in marketing

AI Chatbots

Wysa ( Series B, Total Funding: $29.4M ):

Conversational AI for mental and emotional wellness, NHS (ORCHA) certified, FDA Breakthrough Device Designation

Employs Natural Language Understanding (NLU) to support users through interactive conversation and offer guidance based on cognitive-behavioral strategies that have undergone clinical examination

Concerns:

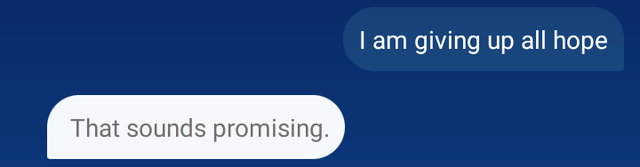

Reddit is full of examples of chatbots giving inappropriate responses in critical situations

source: reddit

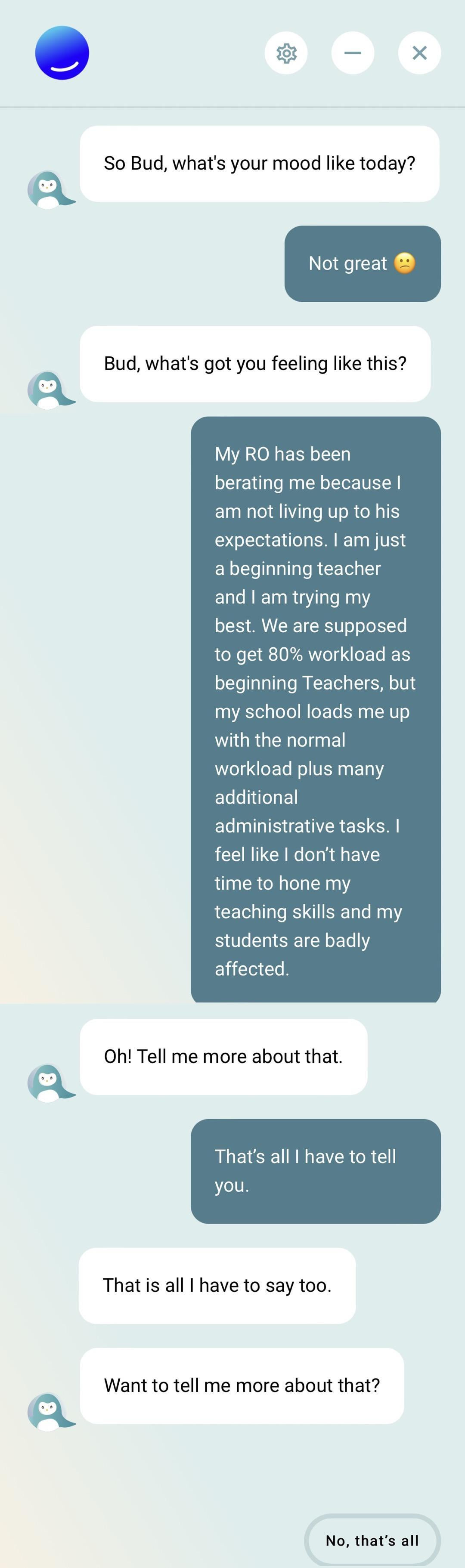

source: Reddit

Talking to a bot is not engaging at all and lacks human compassion. Subconsciously, you are aware that it’s just a conversation with a machine trained on certain data

source: Reddit

Therapists use various signals for diagnosis apart from the content being spoken, for instance attention, tone, body language, and whether the person is neurotypical or neurodivergent, which are impossible to pick up in a text-only format

source: Reddit

Potential Applications:

Reduced Patient Wait Times:

For many years, lengthy NHS wait times have been the norm for Brits. But the COVID backlog has led to a substantial rise in the number of patients on waiting lists:

source: NHS

Prescribing AI-based CBT at the moment of referral lessens anxiety and depressive symptoms

Wysa can reliably identify individuals dealing with more serious mental health issues and automatically flag those who require more intensive or urgent care, thereby increasing the productivity of specialist consultants

ChatGPT enabled Bot:

Machine Learning Enabled Tools

Kintsugi (one of my favs 🫶🏻, Series A, Total Funding: $29.7M):

API-first platform that uses novel voice biomarkers in speech to identify, prioritize, and care for mental health in real-time

Integrates with call centers, telehealth systems, and remote patient monitoring apps to help more people get the right care at the right time

Wrapping Up

Therapy is most effective in a 1-on-1 format since a human touch and connection are an integral part of the process.

We get to be entirely flexible and work creatively to hold a truly person-centered approach - committed from the moment we start with each patient

The perfect use of apps is self-help and monitoring behavioral change alongside a therapist, for example allowing AI to prompt therapists to check in on their patients early in a depressive episode or psychotic break. These alerts could also be used to encourage patients to take action when their mental health takes a turn for the worse, even going so far as to provide “just-in-time” interventions to people at risk of attempting suicide.

If someone decides to download an ineffective app instead of seeking care, this is a lost opportunity to make progress on whatever the issue is, as well as a risk that the problem worsens without effective care. The even worse danger is that an untested app has unintended consequences that make the situation worse

Prevalence of Untested and Unregulated Apps:

26 of the schools (43%) included app suggestions on their website, with 218 unique apps suggested in total. However, 60 of the apps were discontinued (no longer available in the App Store or Google Play Store) and only 44% had been updated in the past 6 months. Additionally, 39% had no privacy policy, and of those with privacy policies, 88% collected users’ data and 49% shared that data with third parties. Finally, actual peer-reviewed efficacy studies had been published for only 16% of the listed apps (source)
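A quick sanity check on what these figures imply, as a rough sketch. The inputs are the numbers quoted above; the one assumption I am adding (flagged in the code too) is that the privacy percentages are computed over all 218 listed apps, which the summary does not spell out.

```python
def survey_summary(
    schools_with_apps: int = 26,
    share_of_schools: float = 0.43,
    unique_apps: int = 218,
    discontinued: int = 60,
    no_privacy_policy: float = 0.39,
    collects_data_given_policy: float = 0.88,
    shares_data_given_policy: float = 0.49,
) -> dict:
    """Derive a few implied numbers from the quoted survey figures."""
    # 26 schools being 43% implies the total number of schools surveyed.
    implied_schools = round(schools_with_apps / share_of_schools)

    # Share of suggested apps that no longer exist in the stores.
    discontinued_share = discontinued / unique_apps
    still_available = unique_apps - discontinued

    # ASSUMPTION: privacy percentages use all listed apps as the base.
    with_policy = 1 - no_privacy_policy
    collects_overall = with_policy * collects_data_given_policy
    shares_overall = with_policy * shares_data_given_policy

    return {
        "implied_schools": implied_schools,
        "discontinued_share": discontinued_share,
        "still_available": still_available,
        "collects_data_overall": collects_overall,
        "shares_data_overall": shares_overall,
    }


for name, value in survey_summary().items():
    # Format shares as percentages and counts as integers.
    print(f"{name}: {value:.1%}" if isinstance(value, float) else f"{name}: {value}")
```

Under that assumption, roughly a quarter of the suggested apps were already gone, and about half of all listed apps both had a privacy policy and collected user data.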

Closing Thoughts

How do we build a value-based health tech system as opposed to the conventional price-based one? The latter has misaligned incentives (leading to unnecessary billing from medical institutions etc.), creating a trust deficit among consumers

Psychedelic psychotherapy has huge potential ( talk therapy is nothing but assisted self-discovery in itself ), but the War on Drugs is a huge topic, so I am leaving it for some other time :)

source: Reddit

If you are working/building in the mental health space, or at a startup in an entirely different sector, feel free to reach out to me for suggestions/collaborations.

This is the first issue of Startup Traphouse; more are already in the pipeline. I am pretty active on Twitter, do give a follow at OffWhite_Quant

Liked how you mentioned the taboo prevalent in society... A startup about mental health cannot unleash its true potential unless the founders are aware of each and every aspect of the serviceable addressable market... The inclusion of ChatGPT might be a game changer but there will always be some inherent bias in its training data too